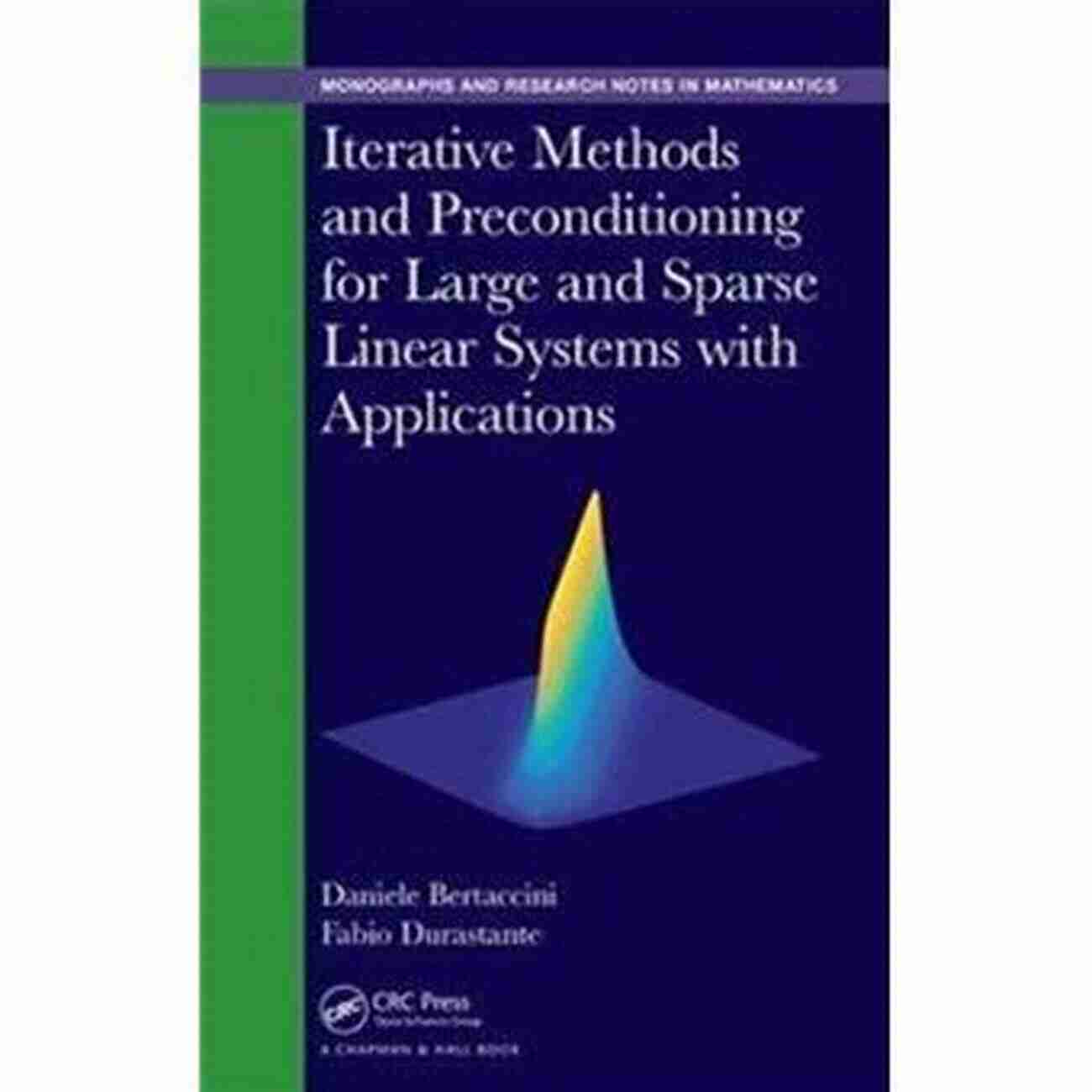

Iterative Methods And Preconditioning For Large And Sparse Linear Systems With

In computational mathematics, solving large and sparse linear systems is a recurring problem across physics, engineering, and computer science, where such systems must be solved efficiently and accurately to model complex phenomena or to make predictions. Iterative methods and preconditioning techniques are valuable tools for this task: for sufficiently large problems they typically need far less memory, and often less time, than direct methods.

When dealing with large and sparse linear systems, direct methods such as Gaussian elimination become expensive because factorization introduces fill-in: entries that are zero in the original matrix become nonzero in the factors, so memory and work can grow far beyond what the sparse matrix itself requires. Iterative methods instead start from an initial guess and generate a sequence of approximations that converge towards the solution, typically touching the matrix only through matrix-vector products. This approach is more memory-efficient and parallelizes well, making it suitable for solving systems with millions or even billions of unknowns.

One commonly used iterative method for large linear systems is the Conjugate Gradient (CG) method, an efficient algorithm for symmetric positive definite systems. At each iteration CG uses the residual, r = b - Ax, which measures how far the current approximation is from satisfying the equations, to build a new search direction that is conjugate to the previous ones with respect to A. The resulting iterate minimizes the error in the A-norm over the current Krylov subspace, so accuracy improves with each iteration until convergence is achieved.
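The CG iteration can be sketched in a few lines of plain Python. This is a minimal illustration, not a production solver: it takes the residual to be r = b - Ax, and the test matrix (the 1D Poisson matrix tridiag(-1, 2, -1)), the row-list sparse storage, and all function names are assumptions chosen for the example.

```python
import math

def poisson_1d(n):
    """Hypothetical test matrix tridiag(-1, 2, -1), which is symmetric positive definite.

    Each row is a list of (column, value) pairs, so only nonzeros are stored.
    """
    rows = []
    for i in range(n):
        row = []
        if i > 0:
            row.append((i - 1, -1.0))
        row.append((i, 2.0))
        if i < n - 1:
            row.append((i + 1, -1.0))
        rows.append(row)
    return rows

def matvec(rows, x):
    """Sparse matrix-vector product; the only way CG touches the matrix."""
    return [sum(v * x[j] for j, v in row) for row in rows]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def conjugate_gradient(rows, b, rtol=1e-10, maxiter=1000):
    """Solve A x = b for SPD A, starting from x = 0. Returns (x, iterations)."""
    x = [0.0] * len(b)
    b_norm = math.sqrt(dot(b, b))
    if b_norm == 0.0:
        return x, 0
    r = list(b)            # residual b - A x for the zero initial guess
    p = list(r)            # first search direction
    rs_old = dot(r, r)
    for k in range(1, maxiter + 1):
        Ap = matvec(rows, p)
        alpha = rs_old / dot(p, Ap)                       # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]  # updated residual
        rs_new = dot(r, r)
        if math.sqrt(rs_new) <= rtol * b_norm:
            return x, k
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]      # next A-conjugate direction
        rs_old = rs_new
    return x, maxiter
```

Calling `conjugate_gradient(poisson_1d(50), b)` returns the approximate solution together with the number of iterations used. Storage stays proportional to the number of nonzeros plus a handful of vectors.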

5 out of 5

| Detail | Value |
| --- | --- |
| Language | English |
| File size | 20648 KB |
| Text-to-Speech | Enabled |
| Screen Reader | Supported |
| Enhanced typesetting | Enabled |
| Print length | 232 pages |

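Another popular iterative method is the Generalized Minimal Residual (GMRES) method, which can handle general non-symmetric systems. Whereas CG minimizes the A-norm of the error, GMRES minimizes the Euclidean norm of the residual over a Krylov subspace. This makes it applicable to a much wider range of linear systems, but the cost per iteration and the storage grow with the iteration count, because every new basis vector must be stored and orthogonalized against all previous ones. In practice the method is therefore often restarted after a fixed number of iterations.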
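A compact, unrestarted GMRES can be sketched as follows, assuming a zero initial guess, modified Gram-Schmidt for the Arnoldi process, and Givens rotations for the small least-squares problem. The function names and the non-symmetric tridiagonal test operator are hypothetical. The sketch does not guard against a singular matrix.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def tridiag_matvec(x, lower=-2.0, diag=4.0, upper=-1.0):
    """Hypothetical non-symmetric test operator tridiag(lower, diag, upper)."""
    n = len(x)
    return [diag * x[i]
            + (lower * x[i - 1] if i > 0 else 0.0)
            + (upper * x[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]

def gmres(matvec, b, rtol=1e-10, maxiter=50):
    """Unrestarted GMRES from x0 = 0. Returns (x, iterations)."""
    n = len(b)
    beta = norm(b)
    if beta == 0.0:
        return [0.0] * n, 0
    V = [[bi / beta for bi in b]]   # orthonormal Krylov basis (grows every step)
    R = []                          # columns of the rotated Hessenberg matrix
    cs, sn = [], []                 # Givens rotation coefficients
    g = [beta]                      # rotated right-hand side; |g[-1]| is the residual norm
    for k in range(maxiter):
        # Arnoldi step: orthogonalize A v_k against all previous basis vectors.
        w = matvec(V[k])
        h = []
        for v in V:
            hv = dot(w, v)
            h.append(hv)
            w = [wi - hv * vi for wi, vi in zip(w, v)]
        w_norm = norm(w)
        h.append(w_norm)
        # Apply earlier rotations to the new column, then eliminate its last entry.
        for i in range(k):
            h[i], h[i + 1] = (cs[i] * h[i] + sn[i] * h[i + 1],
                              -sn[i] * h[i] + cs[i] * h[i + 1])
        d = math.hypot(h[k], h[k + 1])
        c, s = h[k] / d, h[k + 1] / d
        cs.append(c)
        sn.append(s)
        h[k], h[k + 1] = d, 0.0
        g.append(-s * g[k])
        g[k] = c * g[k]
        R.append(h)
        if abs(g[k + 1]) <= rtol * beta or w_norm == 0.0:
            break
        V.append([wi / w_norm for wi in w])
    # Back substitution on the triangular system, then map back to x.
    m = len(R)
    y = [0.0] * m
    for i in reversed(range(m)):
        acc = g[i] - sum(R[j][i] * y[j] for j in range(i + 1, m))
        y[i] = acc / R[i][i]
    x = [sum(y[j] * V[j][i] for j in range(m)) for i in range(n)]
    return x, m
```

The list `V` makes the growing cost visible: iteration k performs k + 1 inner products and keeps k + 1 full-length vectors, which is the motivation for restarting.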

While iterative methods improve efficiency compared to direct methods, they may still require a large number of iterations to converge, especially when dealing with ill-conditioned systems. This is where preconditioning comes into play. Preconditioning techniques aim to transform the original linear system into a better-conditioned one, resulting in faster convergence of iterative solvers.
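In symbols, and using notation that is assumed here rather than taken from the article (a preconditioner $M$ and a condition number $\kappa$), left preconditioning replaces $Ax = b$ by an equivalent system:

$$
M^{-1} A x = M^{-1} b, \qquad M \approx A, \quad M \text{ cheap to apply.}
$$

For CG on a symmetric positive definite matrix, the standard error bound shows why conditioning matters:

$$
\|x - x_k\|_A \le 2 \left( \frac{\sqrt{\kappa} - 1}{\sqrt{\kappa} + 1} \right)^{k} \|x - x_0\|_A, \qquad \kappa = \frac{\lambda_{\max}}{\lambda_{\min}}.
$$

Here $\lambda_{\max}$ and $\lambda_{\min}$ are the extreme eigenvalues of the matrix being iterated on. A good preconditioner brings $\kappa$ of the preconditioned matrix closer to 1, which shrinks the contraction factor.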

There are various approaches to preconditioning, depending on the system's characteristics and the computational resources available. One popular method is the Incomplete LU (ILU) factorization, which computes approximate triangular factors L and U of the matrix while dropping some of the fill-in entries, so that the factors stay sparse and the triangular solves needed to apply the preconditioner stay cheap. When a preconditioner is combined with CG, the resulting algorithm is known as the Preconditioned Conjugate Gradient (PCG) method; it requires the preconditioner to be symmetric positive definite.
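The sketch below illustrates both ideas with the simplest ILU variant, ILU(0), which keeps only entries in the sparsity pattern of the matrix itself. The test problem (a 5-point Laplacian on a square grid), the dictionary-based storage, and the function names are assumptions made for the example. For this symmetric matrix the ILU(0) product LU is itself symmetric positive definite, so using it inside PCG is legitimate.

```python
import math

def poisson_2d(m):
    """Hypothetical test matrix: 5-point Laplacian on an m x m grid.

    Rows are {column: value} dicts holding only the nonzeros.
    """
    rows = [dict() for _ in range(m * m)]
    for iy in range(m):
        for ix in range(m):
            i = iy * m + ix
            rows[i][i] = 4.0
            if ix > 0:
                rows[i][i - 1] = -1.0
            if ix < m - 1:
                rows[i][i + 1] = -1.0
            if iy > 0:
                rows[i][i - m] = -1.0
            if iy < m - 1:
                rows[i][i + m] = -1.0
    return rows

def matvec(rows, x):
    return [sum(v * x[j] for j, v in row.items()) for row in rows]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def ilu0(rows):
    """ILU(0): Gaussian elimination restricted to the sparsity pattern of A.

    Returns one structure holding unit-lower L (below the diagonal) and U.
    """
    lu = [dict(row) for row in rows]
    for i in range(len(lu)):
        for k in sorted(c for c in lu[i] if c < i):
            lu[i][k] /= lu[k][k]                      # multiplier l_ik
            for j in lu[i]:
                if j > k and j in lu[k]:              # update only existing entries
                    lu[i][j] -= lu[i][k] * lu[k][j]   # any fill-in is dropped
    return lu

def ilu_solve(lu, r):
    """Apply the preconditioner: solve L U z = r by two sparse triangular solves."""
    n = len(r)
    y = [0.0] * n
    for i in range(n):
        y[i] = r[i] - sum(v * y[j] for j, v in lu[i].items() if j < i)
    z = [0.0] * n
    for i in reversed(range(n)):
        acc = y[i] - sum(v * z[j] for j, v in lu[i].items() if j > i)
        z[i] = acc / lu[i][i]
    return z

def pcg(rows, b, apply_minv, rtol=1e-8, maxiter=500):
    """Preconditioned CG from x = 0; apply_minv(r) returns M^{-1} r."""
    x = [0.0] * len(b)
    b_norm = math.sqrt(dot(b, b))
    if b_norm == 0.0:
        return x, 0
    r = list(b)
    z = apply_minv(r)
    p = list(z)
    rz_old = dot(r, z)
    for k in range(1, maxiter + 1):
        Ap = matvec(rows, p)
        alpha = rz_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if math.sqrt(dot(r, r)) <= rtol * b_norm:
            return x, k
        z = apply_minv(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz_old) * pi for zi, pi in zip(z, p)]
        rz_old = rz_new
    return x, maxiter
```

Passing `lambda r: list(r)` as `apply_minv` gives plain CG, while `lambda r: ilu_solve(lu, r)` with `lu = ilu0(A)` gives ILU(0)-preconditioned CG, so the two iteration counts can be compared on the same system.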

In addition to the choice of iterative method and preconditioning technique, selecting an appropriate stopping criterion is crucial for the efficient solution of large linear systems. The goal is to strike a balance between computational cost and solution accuracy. Criteria such as a threshold on the residual norm, usually taken relative to the norm of the right-hand side, and a maximum number of iterations can be combined so that the solver terminates in a reasonable time while still providing an accurate solution.
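One way to combine these criteria is a small helper that the solver calls once per iteration. The function name, the tolerance names `rtol` and `atol`, and the default values are assumptions for illustration, not a prescribed interface.

```python
import math

def should_stop(residual_norm, b_norm, iteration, rtol=1e-8, atol=0.0, maxiter=1000):
    """Return (stop, reason) for an iterative solver.

    Converged when ||r|| <= max(rtol * ||b||, atol); otherwise stop on a
    non-finite residual or once the iteration budget is used up.
    """
    if not math.isfinite(residual_norm):
        return True, "diverged"
    if residual_norm <= max(rtol * b_norm, atol):
        return True, "converged"
    if iteration >= maxiter:
        return True, "maxiter"
    return False, ""
```

The relative test makes the tolerance independent of how the right-hand side is scaled, while the absolute floor `atol` avoids demanding an unreachable residual when the right-hand side is very small.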

The development and optimization of iterative methods and preconditioning techniques have greatly benefited from advancements in parallel computing and numerical algorithms. Distributed memory systems, such as clusters or cloud computing platforms, allow for the parallel execution of iterative solvers across multiple nodes, significantly reducing the time required to solve large linear systems. Additionally, continuous research and improvements in numerical algorithms further enhance the performance and accuracy of these methods.

In summary, iterative methods and preconditioning techniques are invaluable tools for solving large and sparse linear systems. They offer significant advantages over direct methods in terms of memory efficiency and parallelization. The Conjugate Gradient and Generalized Minimal Residual methods serve as popular iterative solvers, while preconditioners such as Incomplete LU factorization speed up their convergence, for example within the Preconditioned Conjugate Gradient method. Further advancements in parallel computing and numerical algorithms continue to push the boundaries of computational mathematics, making it possible to solve even larger and more complex linear systems efficiently.

This book describes, in a basic way, the most useful and effective iterative solvers and appropriate preconditioning techniques for some of the most important classes of large and sparse linear systems.

The solution of large and sparse linear systems is the most time-consuming part of most scientific computing simulations. Indeed, mathematical models become more and more accurate by including a greater volume of data, but this requires the solution of larger and harder algebraic systems. In recent years, research has focused on using iterative solvers for the efficient solution of large sparse and/or structured systems generated by the discretization of numerical models.
